Let me introduce you to Philip Nitschke, also known as “Dr. Death” or “the Elon Musk of assisted suicide.”

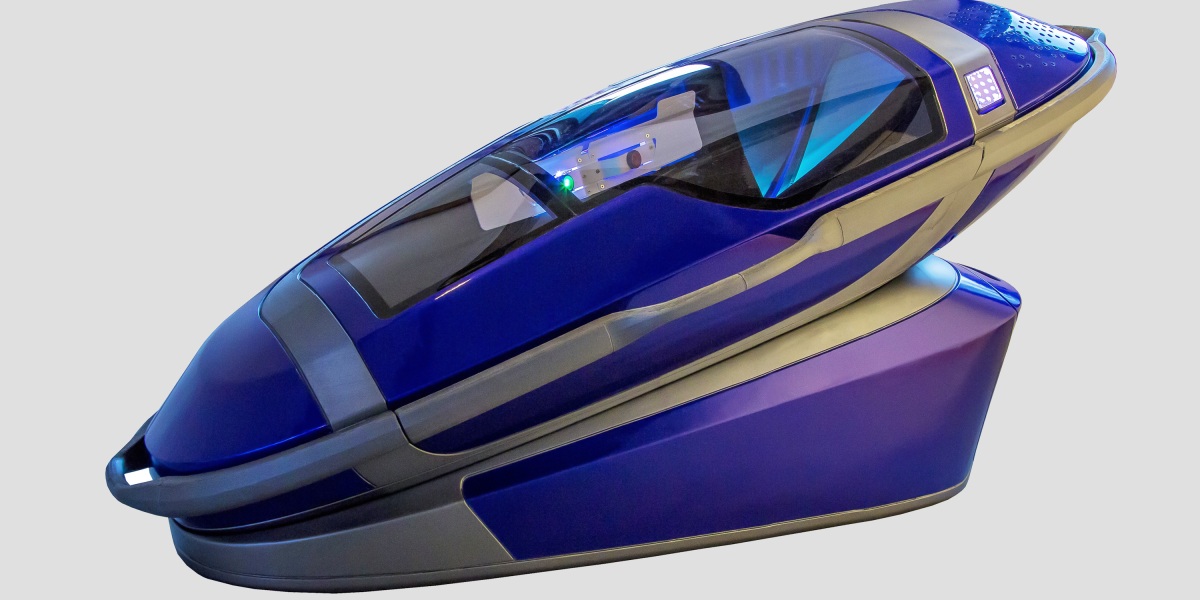

Nitschke has a curious goal: He wants to “demedicalize” death and make assisted suicide as unassisted as possible through technology. As my colleague Will Heaven [reports](https://www.technologyreview.com/2022/10/13/1060945/artificial-intelligence-life-death-decisions-hard-choices/), Nitschke has developed a coffin-size machine called the Sarco. People seeking to end their lives can enter the machine after undergoing an algorithm-based psychiatric self-assessment. If they pass, the Sarco will release nitrogen gas, which asphyxiates them in minutes. A person who has chosen to die must answer three questions: Who are you? Where are you? And do you know what will happen when you press that button?

In Switzerland, where assisted suicide is legal, candidates for assisted suicide must demonstrate mental capacity, which is typically assessed by a psychiatrist. But Nitschke wants to take people out of the equation entirely.

Nitschke is an extreme example. But as Will writes, AI is already being used to triage and treat patients in a growing number of health-care fields. Algorithms are becoming an increasingly important part of care, and we must try to ensure that their role is limited to medical decisions, not moral ones.

Will explores the messy morality of efforts to develop AI that can help make life-and-death decisions [here](https://www.technologyreview.com/2022/10/13/1060945/artificial-intelligence-life-death-decisions-hard-choices/).

I’m probably not the only one who feels extremely uneasy about letting algorithms make decisions about whether people live or die. Nitschke’s work seems like a classic case of misplaced trust in algorithms’ capabilities. He’s trying to sidestep complicated human judgments by introducing a technology that could make supposedly “unbiased” and “objective” decisions.

That is a dangerous path, and we know where it leads. AI systems reflect the humans who build them, and they are riddled with biases. We’ve seen facial recognition systems that fail to recognize Black people or misidentify them as criminals, and image-labeling software that tagged them as gorillas. In the Netherlands, tax authorities used an algorithm to try to weed out benefits fraud, only to penalize innocent people, mostly lower-income people and members of ethnic minorities. This led to devastating consequences for thousands: bankruptcy, divorce, suicide, and children being taken into foster care.

As AI is rolled out in health care to help make some of the highest-stakes decisions there are, it’s more crucial than ever to critically examine how these systems are built. Even if we managed to create a perfect algorithm with zero bias, algorithms would still lack the nuance and complexity to make decisions about humans and society on their own. We should carefully question how much decision-making we really want to turn over to AI. There is nothing inevitable about letting it further and further into our lives and societies. That is a choice made by humans.