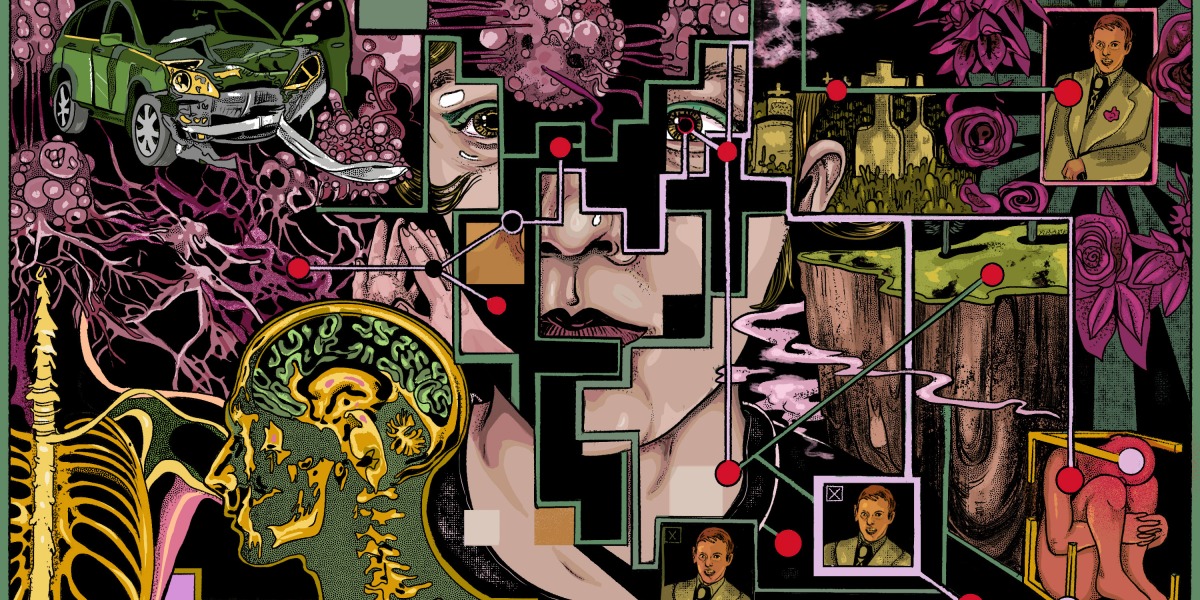

I am a mostly visual thinker, and thoughts pose as scenes in the theater of my mind. When my many supportive family members, friends, and colleagues asked how I was doing, I’d see myself on a cliff, transfixed by an omniscient fog just past its edge. I’m there on the brink, with my parents and sisters, searching for a way down. In the scene, there is no sound or urgency, and I am waiting for the fog to swallow me. I’m searching for shapes and navigational clues, but the fog is so huge and gray and boundless.

I wanted to take that fog and put it under a microscope. I started Googling the stages of grief, and books and academic research about loss, from the app on my iPhone, perusing personal disaster while I waited for coffee or watched Netflix. How will it feel? How will I manage it?

I started, intentionally and unintentionally, consuming people’s experiences of grief and tragedy through Instagram videos, various newsfeeds, and Twitter testimonials. It was as if the internet secretly teamed up with my compulsions and started indulging my own worst fantasies; the algorithms were a sort of priest, offering confession and communion.

Yet with every search and click, I inadvertently created a sticky web of digital grief. Ultimately, it would prove nearly impossible to untangle myself. My mournful digital life was preserved in amber by the pernicious personalized algorithms that had deftly observed my mental preoccupations and offered me ever more cancer and loss.

I got out—eventually. But why is it so hard to unsubscribe from and opt out of content that we don’t want, even when it’s harmful to us?

I’m well aware of the power of algorithms—I’ve written about the mental-health impact of Instagram filters, the polarizing effect of Big Tech’s infatuation with engagement, and the strange ways that advertisers target specific audiences. But in my haze of panic and searching, I initially felt that my algorithms were a force for good. (Yes, I’m calling them “my” algorithms, because while I realize the code is uniform, the output is so intensely personal that they feel like mine.) They seemed to be working with me, helping me find stories of people managing tragedy, making me feel less alone and more capable.

In reality, I was intimately and intensely experiencing the effects of an advertising-driven internet, which Ethan Zuckerman, the renowned internet ethicist and professor of public policy, information, and communication at the University of Massachusetts at Amherst, famously called “the Internet’s Original Sin” in a 2014 Atlantic piece. In the story, he explained the advertising model that brings revenue to content sites that are most equipped to target the right audience at the right time and at scale. This, of course, requires “moving deeper into the world of surveillance,” he wrote. This incentive structure is now known as “surveillance capitalism.”

Understanding how exactly to maximize the engagement of each user on a platform is the formula for revenue, and it’s the foundation for the current economic model of the web.