To test its new approach, Hugging Face estimated the overall emissions for its own large language model, BLOOM, which was launched earlier this year. It was a process that involved adding up lots of different numbers: the amount of energy used to train the model on a supercomputer, the energy needed to manufacture the supercomputer’s hardware and maintain its computing infrastructure, and the energy used to run BLOOM once it had been deployed. The researchers calculated that final part using a software tool called CodeCarbon, which tracked the carbon emissions BLOOM was producing in real time over a period of 18 days.

Hugging Face estimated that BLOOM’s training led to 25 metric tons of carbon emissions. But, the researchers found, that figure doubled when they took into account the emissions produced by the manufacturing of the computer equipment used for training, the broader computing infrastructure, and the energy required to actually run BLOOM once it was trained.
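The doubling described above is simple addition, and a rough sketch makes the accounting explicit. The class and field names below are hypothetical, not taken from the paper. The only figures used are the 25 metric tons for training and the implied 25 metric tons for everything else (since the total doubled); the split among manufacturing, infrastructure, and deployment is not given here, so those are kept as a single lumped value.

```python
from dataclasses import dataclass


@dataclass
class LifeCycleEstimate:
    """Hypothetical container for a model's emissions, in metric tons of CO2."""

    training_t: float  # electricity consumed during training
    other_t: float     # manufacturing + infrastructure + deployment, lumped together

    @property
    def total_t(self) -> float:
        # Life-cycle total is the sum of all accounted components.
        return self.training_t + self.other_t

    @property
    def training_share(self) -> float:
        # Fraction of the total that training alone accounts for.
        return self.training_t / self.total_t


# 25 t for training is from the article; 25 t for the rest is implied
# by the statement that the figure doubled (assumption: exactly doubled).
bloom = LifeCycleEstimate(training_t=25.0, other_t=25.0)
print(bloom.total_t)         # 50.0
print(bloom.training_share)  # 0.5
```

Under these assumptions, counting only training electricity would capture about half of the model's estimated footprint.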

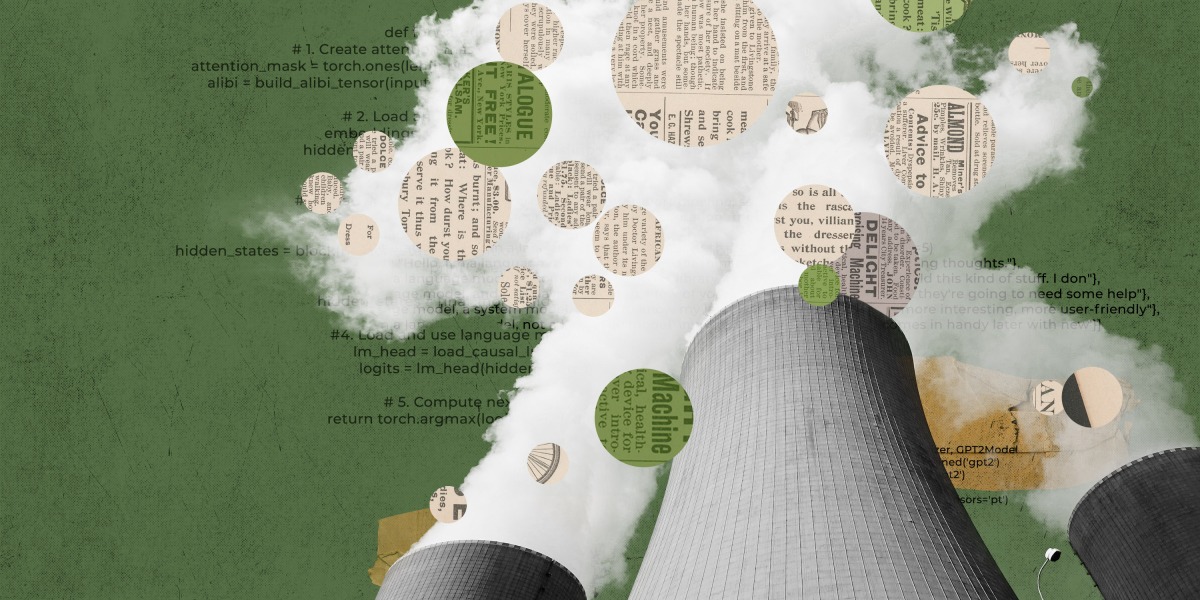

While that may seem like a lot for one model (50 metric tons of carbon emissions is the equivalent of around 60 flights between London and New York), it’s significantly less than the emissions associated with other LLMs of the same size. This is because BLOOM was trained on a French supercomputer that is mostly powered by nuclear energy, which doesn’t produce carbon emissions when generating electricity. Models trained in China, Australia, or some parts of the US, which have energy grids that rely more on fossil fuels, are likely to be more polluting.

After BLOOM was launched, Hugging Face estimated that using the model emitted around 19 kilograms of carbon dioxide per day, which is similar to the emissions produced by driving around 54 miles in an average new car.
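To put the daily figure in context, the sketch below scales it to a year and compares it with the training estimate. It assumes the 19 kilograms per day rate stays constant, which the article does not claim: the measurement covered only 18 days, and real usage would vary. The function names are hypothetical.

```python
KG_PER_TONNE = 1000.0


def annual_tonnes(kg_per_day: float, days_per_year: int = 365) -> float:
    """Scale a daily emission rate (kg) to metric tons per year."""
    return kg_per_day * days_per_year / KG_PER_TONNE


def days_to_match(training_tonnes: float, kg_per_day: float) -> float:
    """Days of deployment needed for usage emissions to equal training emissions."""
    return training_tonnes * KG_PER_TONNE / kg_per_day


# Figures from the article: 19 kg/day in deployment, 25 t for training.
# Assumption: the daily rate is constant over time.
yearly = annual_tonnes(19.0)
breakeven = days_to_match(25.0, 19.0)
print(f"{yearly:.1f} t per year")
print(f"{breakeven:.0f} days to equal training emissions")
```

At that constant rate, a year of use would emit roughly 7 metric tons, and it would take about three and a half years of deployment to match the emissions of training.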

By way of comparison, OpenAI’s GPT-3 and Meta’s OPT were estimated to emit more than 500 and 75 metric tons of carbon dioxide, respectively, during training. GPT-3’s vast emissions can be partly explained by the fact that it was trained on older, less efficient hardware. But it is hard to say what the figures are for certain; there is no standardized way to measure carbon emissions, and these figures are based on external estimates or, in Meta’s case, limited data the company released.

“Our goal was to go above and beyond just the carbon emissions of the electricity consumed during training and to account for a larger part of the life cycle in order to help the AI community get a better idea of their impact on the environment and how we could begin to reduce it,” says Sasha Luccioni, a researcher at Hugging Face and the paper’s lead author.

Hugging Face’s paper sets a new standard for organizations that develop AI models, says Emma Strubell, an assistant professor in the school of computer science at Carnegie Mellon University, who wrote a seminal paper on AI’s impact on the climate in 2019. She was not involved in this new research.

The paper “represents the most thorough, honest, and knowledgeable analysis of the carbon footprint of a large ML model to date as far as I am aware, going into much more detail … than any other paper [or] report that I know of,” says Strubell.