While Biden has in the past called for stronger privacy protections and for tech companies to stop collecting data, the US, home to some of the world’s biggest tech and AI companies, has so far been one of the few Western nations without clear guidance on how to protect its citizens against AI harms.

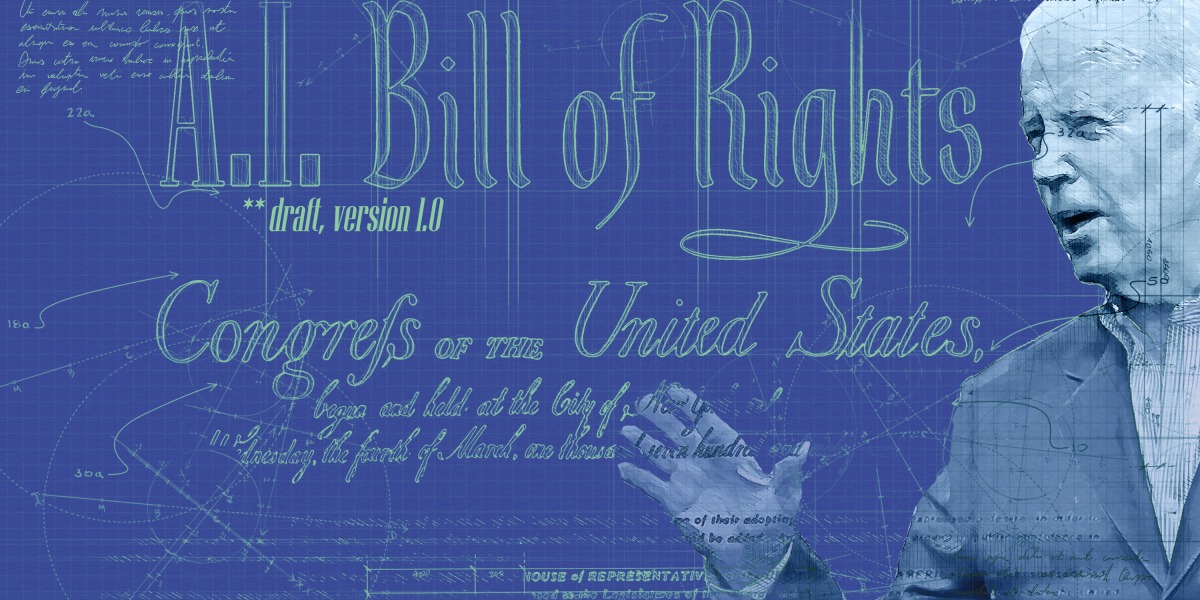

Today’s announcement is the White House’s vision of how the US government as well as technology companies and citizens should work together to hold AI accountable. However, critics say the blueprint lacks teeth, and the US needs even stronger regulation around AI.

In September, the administration announced core principles for tech accountability and reform, such as stopping discriminatory algorithmic decision-making, promoting competition in the technology sector and providing federal protections for privacy.

The AI Bill of Rights is a blueprint for how to achieve those goals. The vision for it was first introduced a year ago by the Office of Science and Technology Policy (OSTP), a US government office that advises the President on science and technology. The blueprint provides practical guidance to government agencies and a call to action for technology companies, researchers, and civil society to build these protections.

“These technologies are causing real harms in the lives of Americans, harms that run counter to our core democratic values, including the fundamental right to privacy, freedom from discrimination and our basic dignity,” a senior administration official told reporters at a press conference.

AI is a powerful technology that is transforming our societies. It also has the potential to cause serious harm, which often disproportionately affects minorities. Facial recognition technologies used in policing and algorithms that allocate benefits are less accurate for ethnic minorities, for example.

The Bill of Rights aims to redress that balance. It says that Americans should be protected from unsafe or ineffective systems; that they should not face discrimination by algorithms, and that systems should be designed and used in an equitable way; and that they should be protected from abusive data practices through built-in protections and have agency over their data. Citizens should also know when an automated system is being used on them and understand how it contributes to outcomes. Finally, people should always be able to opt out of AI systems in favor of a human alternative and have access to remedies for problems.

“We want to make sure that we are protecting people from the worst harms of this technology, no matter the specific underlying technological process used,” a second senior administration official said.